… I’ve looked at clouds from both sides now

From up and down and still somehow

It’s cloud illusions I recall

I really don’t know clouds at all

–Joni Mitchell

Fifteen years ago Netflix embarked on its successful migration to the cloud, yet many are still struggling to duplicate this feat. The benefits of agility, scalability, reliability, and reduced cost are compelling, but the path to achieving them is still not well understood.

Many organizations continue to report significant failures of cloud migration projects. Long lists of cloud provider services and Cloud Native Computing Foundation projects are regularly published to highlight the shortage of staff with expertise and skills in such technologies and processes.

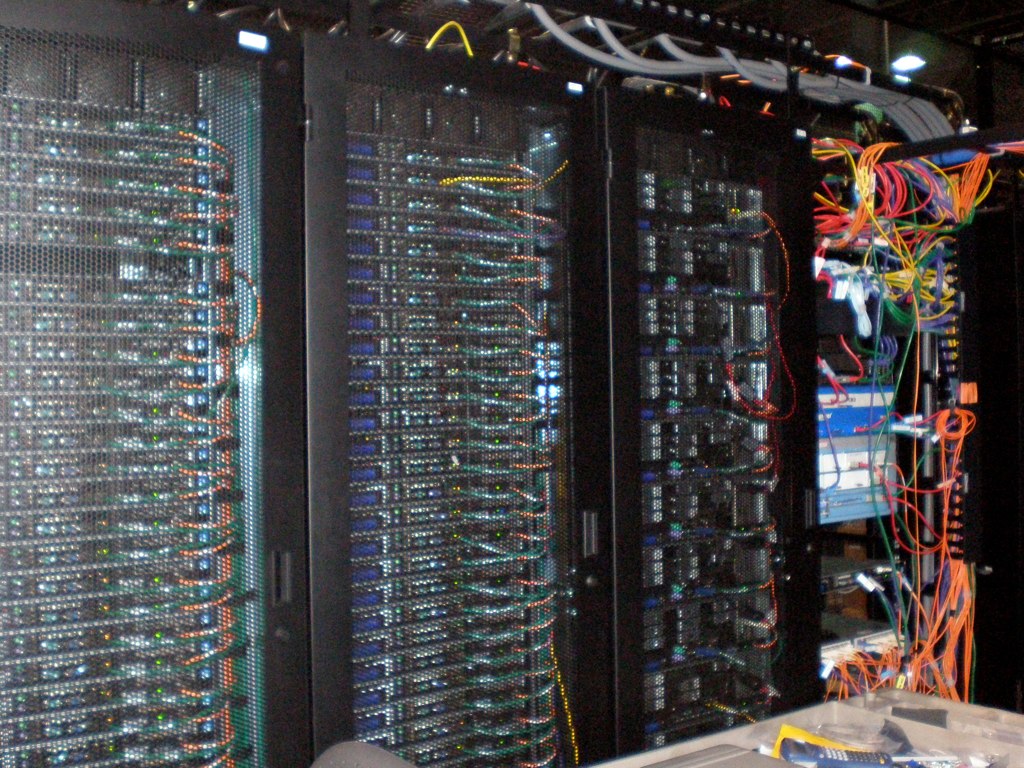

Why is it so hard to repeat Netflix’s success? Isn’t it all just a bunch of computers, hosted in the cloud provider’s data center? As they say, the devil is in the details, but in this particular case some of the most important details can be hard to see.

Why is the cloud different?

From a distance, the cloud looks like one big computer, just like what you have in a traditional IT data center. But if you look up close, you can see that cloud data centers are composed of hundreds and thousands of smaller, consumer grade computers networked together.

The “clouds” of consumer grade computers are capable of running the same workloads as traditional large enterprise servers, but offer better reliability, scalability, agility, and lower cost (although costs need to be managed carefully).

You can certainly run your existing applications in the cloud—you can “lift ‘n’ shift.” But you won’t get the added benefits unless you re-engineer.

So something must be different, right? And it matters because it’s not usually a simple task to re-engineer traditional enterprise applications to obtain all the benefits of cloud computing.

Hardware component failure rates

The difference people most often miss is at the hardware level. After decades of the hardware layer providing more or less the same abstractions to the programming language and data layers, it’s easy to lose track of how hardware-level differences affect applications.

Consumer grade hardware components have significantly higher failure rates than enterprise grade hardware components. It seems like the higher component failure rates should cause a lot of incidents and outages, since that’s what happens when enterprise hardware components fail in the traditional data center.

This is the magic of the cloud, and the key difference when you look at it up close: consumer grade components are cheap enough that they can be deployed redundantly without increasing the overall cost of hardware. (In fact, hardware costs are typically much lower in the cloud.) Whenever a component fails, such as a disk, CPU, memory array, or network card, another component is instantly available to take its place on another computer. And new computers can be seamlessly put in place of the failed ones, while applications keep on running.

Hardware failures in the cloud are handled by replication of both processing and data. (As the old saying goes, data processing, now more typically called IT, consists mainly of data and processing.) Whenever any single hardware component fails, another one is standing by to immediately take its place. Whenever a single data processing node fails, another one is standing by to take its place.

Sounds simple enough, doesn’t it? But enterprise applications are not written to work this way. More typically they are monoliths using a single instance of application code and a single, shared database running on a specific machine (or specific set of machines). When one of those machines fails, it usually creates an outage (at least partial) or an incident.

This is where the well known “cattle vs pets” analogy comes in. In the traditional enterprise world, servers are named and managed like pets. It’s treated like an unexpected tragedy when one of them dies.

In the cloud, servers are anonymous and are managed like herds of cattle. The death of a single animal is treated as a normal event, and the animal can easily be replaced to maintain the size of the herd.
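To make the herd idea concrete, here is a minimal Python sketch of keeping a fleet at a target size. It is an illustration only: `Instance`, `reconcile`, and `desired_size` are hypothetical names invented for this example, not any cloud provider’s API.

```python
import itertools
from dataclasses import dataclass, field

# Hypothetical id generator: instances are anonymous and interchangeable,
# so a running counter is all the identity they get.
_ids = itertools.count(1)


@dataclass
class Instance:
    instance_id: int = field(default_factory=lambda: next(_ids))
    healthy: bool = True


def reconcile(herd, desired_size):
    """Drop failed instances and launch replacements up to desired_size."""
    survivors = [i for i in herd if i.healthy]
    missing = max(0, desired_size - len(survivors))
    replacements = [Instance() for _ in range(missing)]
    return survivors + replacements


# Start with an empty herd and grow it to three instances.
herd = reconcile([], desired_size=3)

# One instance dies. Nobody repairs it; the next pass simply replaces it.
herd[1].healthy = False
herd = reconcile(herd, desired_size=3)
```

Nothing in the sketch cares which instance failed. The only thing that is managed is the size of the herd.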

What does it mean for the application layer?

Applications running on components with high failure rates need to have a redundant deployment of code and data ready and available to take over at a moment’s notice when the hardware they’re running on fails. And there’s the rub.

Typical enterprise IT applications are not designed for this. They are designed on the assumption that the enterprise grade hardware components do not fail, or at least not often enough to cause significant problems.

In cloud computing, frequent hardware component failure is assumed, and systems are designed to support this assumption. When a hardware component fails, the cloud just switches traffic to a replica. That is why microservices based architectures are popular. It’s easier to replicate smaller units of code, and better to isolate failures to a smaller unit of code and data (a best practice for microservices is for each microservice to own its own data).
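Switching traffic to a replica can be sketched in a few lines of Python. This is a simplified illustration, not how any particular load balancer works: `Replica`, `ReplicaDown`, and `route` are hypothetical names, and real systems add health checks, timeouts, and retries on top.

```python
class ReplicaDown(Exception):
    """Raised when a replica's underlying hardware has failed."""


class Replica:
    def __init__(self, name, up=True):
        self.name = name
        self.up = up

    def handle(self, request):
        if not self.up:
            raise ReplicaDown(self.name)
        return f"{self.name} handled {request}"


def route(request, replicas):
    """Send the request to the first replica that is able to answer."""
    for replica in replicas:
        try:
            return replica.handle(request)
        except ReplicaDown:
            continue  # failure is expected: move on to the next replica
    raise RuntimeError("no healthy replica available")


# The first replica has failed, so the caller is served by the second one.
replicas = [Replica("replica-a", up=False), Replica("replica-b")]
result = route("GET /cart", replicas)
```

The caller never sees the failure of `replica-a`. That only works because the replicas are interchangeable, which is much easier to arrange for a small service than for a monolith.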

In enterprise IT, failure is treated as an exceptional condition to be eliminated. Normal operations grind to a halt until the problem is fixed and normal operations are restored. Afterwards, significant time and effort are poured into root cause analysis and remediation – trying to ensure the application is updated so that such a failure never occurs again.

Enterprise IT hardware manufacturers put a lot of time and effort into reducing the failure rates of their components. And they have made a lot of progress over the years. So much so that system and application failures are less common than ever.

But engineering and manufacturing to reduce failure is expensive. It’s actually cheaper to buy consumer grade components that fail more often, and build failure tolerant systems and applications on top of them.

(I’m starting to think the computer industry has been hallucinating all this time, thinking they could actually one day eliminate all failures in systems composed of fragile electronic devices connected via unreliable networks and managed by fat fingered operators running buggy software.)

The data challenge

On the database side, where the data is stored and from which it must be retrieved, it’s critically important to understand what is the latest state, or the correct state of a core business artifact such as a bank account or shopping cart. No one wants to get critical information wrong, or get out of date information.

Because failures can happen at any time, a common solution is to replicate data, as previously mentioned. If one machine (or component of the machine) fails, another machine can take its place. But updates to data on multiple machines are not instantaneous, because of network lag and processing time. How do we know which of the multiple versions of the data is correct?
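One simple way to answer that question is to attach a version number to every copy and trust the highest one. The Python sketch below assumes that all writes pass through a single writer that increments the version, which is a big simplifying assumption; `Versioned` and `read_latest` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    version: int  # assumed to be incremented by each accepted write


def read_latest(copies):
    """Return the copy with the highest version number."""
    return max(copies, key=lambda c: c.version)


# Three replicas of one shopping cart. Two have not yet received the
# most recent update because of network lag.
copies = [
    Versioned("cart: 1 item", 3),
    Versioned("cart: 2 items", 4),
    Versioned("cart: 1 item", 3),
]
latest = read_latest(copies)
```

Once two machines can accept writes independently, a single counter is no longer enough, and deciding which copy is correct gets much harder.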

This is perhaps the biggest challenge of the cloud: how to achieve transactional consistency in the face of constant hardware component failures and replicated data. A lot of industry innovation and product development has occurred in the database space: NoSQL, document data stores, eventual consistency stores, data sharding, cached data, transactional compensation mechanisms such as sagas, and so on.
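As an example of a compensation mechanism, here is a minimal saga sketch in Python. Each step is paired with an action that undoes it, and when a step fails the completed steps are undone in reverse order. The function names and the order scenario are hypothetical, and a production saga would also need to persist its progress so it can survive a crash.

```python
def run_saga(steps):
    """Run (action, compensation) pairs; undo completed steps on failure."""
    completed = []
    try:
        for action, compensate in steps:
            action()
            completed.append(compensate)
    except Exception:
        # Undo in reverse order, most recent step first.
        for compensate in reversed(completed):
            compensate()
        return False
    return True


log = []


def reserve_stock():
    log.append("reserve stock")


def release_stock():
    log.append("release stock")


def charge_card():
    raise RuntimeError("payment service unavailable")


def refund_card():
    log.append("refund card")


# The payment step fails, so the stock reservation is released again.
ok = run_saga([(reserve_stock, release_stock), (charge_card, refund_card)])
```

Note that `refund_card` never runs, because the charge never succeeded. Only steps that completed are compensated.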

The industry is working hard to catch up to the database features and capabilities that traditional IT environments have enjoyed for many years, while also providing a variety of data storage and retrieval options that support the benefits of cloud computing. For cloud systems, however, much of this still remains in the realm of the application today, and handling transactional consistency at the application level can often throw you for a loop.

The biggest challenge in all of this tends to be correctly figuring out which parts of the problem have to be solved at the application layer vs the system software layer to achieve the transition from stateful interactions with data (typical for traditional enterprise applications) to stateless interactions with data (typical for cloud native applications). How and where to draw this line is constantly changing due to the continued evolution of cloud computing standards and innovative new database technologies.
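The stateful vs stateless distinction is easier to see in code. In the hedged Python sketch below, `StatefulServer` keeps shopping carts in its own memory, while `StatelessServer` reads and writes them through a shared store. Both classes are hypothetical, and the plain dictionary only stands in for an external database; it does nothing to handle concurrent updates.

```python
class StatefulServer:
    """Keeps carts in process memory, so they die with the process."""

    def __init__(self):
        self.carts = {}

    def add_item(self, user, item):
        self.carts.setdefault(user, []).append(item)
        return list(self.carts[user])


class StatelessServer:
    """Keeps nothing between requests; all state lives in the store."""

    def __init__(self, store):
        self.store = store  # stand-in for an external database

    def add_item(self, user, item):
        cart = self.store.get(user, []) + [item]
        self.store[user] = cart
        return cart


# Stateful: the second server knows nothing about the first one's cart.
a, b = StatefulServer(), StatefulServer()
a.add_item("ann", "book")
stateful_result = b.add_item("ann", "pen")

# Stateless: any server can pick up where another left off.
shared_store = {}
c, d = StatelessServer(shared_store), StatelessServer(shared_store)
c.add_item("ann", "book")
stateless_result = d.add_item("ann", "pen")
```

In the stateless version either server can fail without losing the cart, but the consistency problem has not gone away. It has moved into the store.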

Why does this matter? And more importantly, how?

Let’s review the ways in which computing in the cloud is different from computing in the enterprise data center:

- It’s delivered as a service—there is no operations department. Developers use APIs to provision needed infrastructure, and it can be done on demand.

- Cloud infrastructure is scalable and elastic—it’s easy to add or reduce capacity dynamically. No more building new data centers as demand increases or sizing for peak loads and having excess capacity sitting around unused.

- Cloud infrastructure is highly reliable and available, with built-in redundancy and failover mechanisms to ensure that apps are “always on.” Enterprise systems have to be duplicated entirely for continuity of business (COB) purposes, which is a huge additional expense if you want to match what the cloud offers as a built-in capability.

- Cloud computing is highly automated, not only provisioning of infrastructure using APIs but also deploying application updates rapidly and automatically managing systems. Enterprise IT systems typically rely on manual operations to deploy applications and manage systems.

All of these benefits can be achieved, but only by rewriting traditional applications that are impacted by the failure of a single server or of a significant hardware component. This means application developers have to understand more of the details of how the hardware systems work than they used to, and draw the line differently between what the application is responsible for and what the system software (i.e. database software) provides.

The Intellyx Take

The benefits of cloud computing are numerous and well understood. Achieving them, however, depends on taking a close look at cloud infrastructure and how it handles hardware failure, and on understanding what needs to be done to rework existing enterprise applications to handle failure scenarios while maintaining transactional consistency.

In particular, this means figuring out how to successfully re-engineer for a stateless application architecture, and being able to gracefully resolve the problem of making independent updates to replicated data.

Solving these challenges is a continuous process, and many vendors continue to work to improve the solutions in these areas.

As far as I’m concerned, this is pretty much just a matter of developing technology at the right level of abstraction, where everyone can easily understand it and know what to do, and it’s bound to happen. After all, not everyone can afford to hire the best software engineers and pay them top salaries, the way Netflix did.

©2023 Intellyx LLC. Intellyx is editorially responsible for the content of this document, and no AI chatbots were used to write it. Image source: Sean Ellis, Wikimedia commons under CC2.0 license.