An Intellyx BrainBlog, for Openstream.AI

What happens inside the mind of the world’s greatest human customer service rep (CSR)?

Putting aside the fact that our brains don’t operate in a code-driven, observable way like computers, we can make some assumptions about the thought processes of an extremely effective CSR:

- They would learn from observation and experience about a variety of customer problems from thousands of past help sessions to intuitively predict likely solutions.

- They would have developed an encyclopedic knowledge of domain-specific details about what data contributes to a given issue, and know about workarounds and references when looking to fill gaps in this knowledge.

- They would interact with customers with understanding and empathy, making them feel listened to as if finding solutions to their problems were genuinely a top priority.

When a CSR is ‘in the zone,’ they are routinely applying all three of these thought processes at the same time with every conversation, not just for delivering faster answers, but better customer outcomes.

Of course, cultivating and retaining enough human CSRs who are so tuned-in is nearly impossible for most companies. No matter what compensation and perks are offered to these highly valued employees, customer service is an inherently stressful job. The focus and performance of a CSR team may therefore be inconsistent throughout the day or week, and turnover is high in these positions.

That’s why innovative companies are turning to AI-based knowledge creation, allowing systems to augment the customer service group’s capabilities with responsive and understanding intelligent agents.

Compressing comprehension time

The primary constraint on scaling excellent customer service is a shortage of human talent. That’s why organizations must get human agents up to speed on the specifics of their businesses as quickly as possible, so they can productively help customers for as long as they stay in the role.

This is easier said than done. Take for instance a healthcare scenario – the world’s best CSR employee could not possibly read and retain the current details of each plan, much less the specifics of individual customer policies in toto – even if they tried.

With experience, that CSR will build up a specific knowledge domain for the healthcare industry they work in. Over time, they learn how certain customer sentiments and statements provide guideposts, allowing them to spot similar tendencies and probable solutions for healthcare customer issues.

Virtual agents are a natural fit to augment the intelligence of human CSRs. When a customer calls in, an AI-based agent could read a subscriber’s complete coverage profile in moments, map the customer’s query to that context, and come up with many more possible options and outcomes.

The AI-based agent can bring together records of all of the text messages, software requests and responses, and voice conversations that touched a specific customer, which can be associated with metadata labels and conveyed to the CSR in an assistive mode if desired.

Processing so much text data and recognizing voice in a conversation might be considered intelligent automation, but it goes deeper than simply capturing a series of conversation flows. The hard part is negotiating unknown customer edge cases and probable responses when no two conversations are exactly the same.

We’re looking toward building a knowledge domain for the AI agent, so it can be much more than a stand-in for the CSR in front of customers.

Understanding customer intentions

Human customers don’t follow a script when they ask for help. In fact, they may not even articulate their goals using conventional questions at all.

A human CSR gets better over time at recognizing when statements, short utterances, and non-verbal cues such as an eye roll or scowl signal a problem for the customer.

Openstream.ai has built an assistive AI with just such an ability to interpret customer goals within a conversation, marrying that with a deep industry-specific knowledge domain.

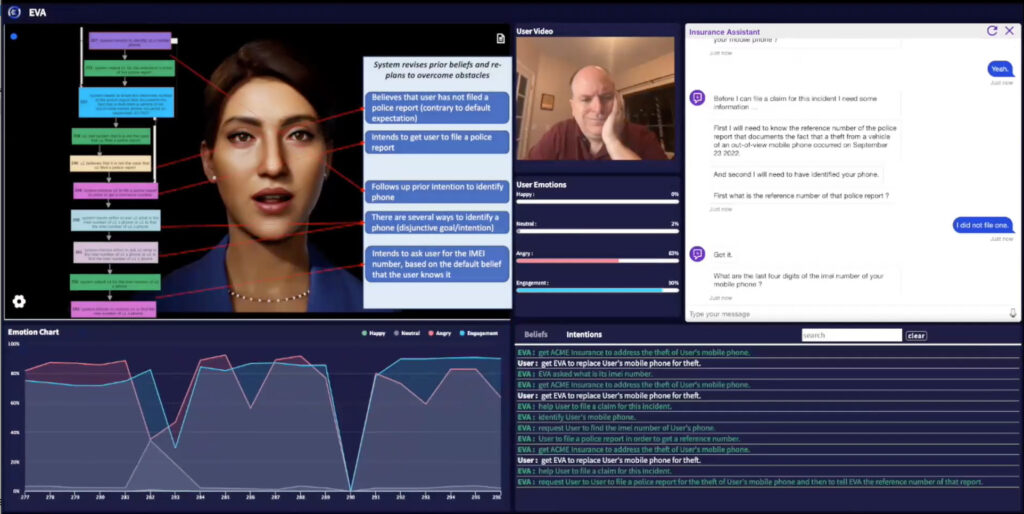

In an insurance industry demo, their Eva intelligent agent is pictured as a customer-facing avatar from an administrator’s point of view, with the face of the virtual agent and a human customer, flanked by a huge data graph of captured conversational and sentiment elements that changes on the fly along with the flow of conversation.

When the customer utters “My phone is stolen” on the video chat, that is a statement, not a question or request. But the Eva AI agent understands the negative condition of the stolen phone, and the customer’s facial expression signifying a sentiment of frustration.

As Eva starts building up a plan to change that negative state, she doesn’t spend time asking for more details yet. Instead, she asks about potential obstacles: “Did you file a police report?” The AI has the domain knowledge to understand that without a police report, the device couldn’t be reported to the phone’s insurance provider as stolen.

The customer says no, leaves the conversation and files a report. When he logs back into the help session with a police incident number, Eva returns with the original conversation plan, loaded up with preconditions. This avoids a frustration typical of larger call centers, where a customer will not likely get the same CSR twice.
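For readers who think in code, the idea of a conversation plan that carries its preconditions across sessions can be sketched roughly as follows. This is only an illustration, not a description of how Eva is built: the `Plan` class, the `saved_plans` store, the `resume_or_create` helper, and the precondition name `police_report_filed` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """A customer goal plus the preconditions that must hold before it can proceed."""
    goal: str
    preconditions: dict = field(default_factory=dict)  # precondition name -> met?

    def next_obstacle(self):
        """Return the first unmet precondition, or None when nothing blocks the goal."""
        for name, met in self.preconditions.items():
            if not met:
                return name
        return None


# Hypothetical in-memory store standing in for persisted session state.
saved_plans = {}


def resume_or_create(customer_id, goal, preconditions):
    """Return the customer's saved plan if one exists; otherwise start a new one."""
    if customer_id not in saved_plans:
        saved_plans[customer_id] = Plan(goal, {name: False for name in preconditions})
    return saved_plans[customer_id]


# First session: the customer reports a stolen phone but has no police report yet.
plan = resume_or_create("customer-1", "file stolen-phone claim", ["police_report_filed"])
first_obstacle = plan.next_obstacle()

# Second session: the same customer returns with a police incident number.
resumed = resume_or_create("customer-1", "file stolen-phone claim", ["police_report_filed"])
resumed.preconditions["police_report_filed"] = True
remaining_obstacle = resumed.next_obstacle()
```

In this sketch the returning customer gets back the same plan object rather than a fresh conversation, which mirrors the point above: the obstacle is asked about once, and the session picks up where it left off.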

This goal-oriented dialogue continues, but just as importantly, Eva’s on-screen avatar also makes facial expressions to convey empathy, concentration or relief based on the customer’s facial and tone cues at the right times in the conversation, leading to a more satisfactory customer experience alongside a satisfying conclusion.

Explaining why with explainable AI

A meaningful conversation doesn’t just gauge and predict the likely sentiment of the customer, it also allows the AI chatbot to self-report its own intentions when making expressions or statements.

What seems like an expression of sympathy can instead look like a microaggression to a human customer if the timing of a facial response is off, or if the language seems ironic or clueless to its context.

An explainable AI solution may show the inference source code or ML training data that created a decision or prediction. But explainability can happen within a conversation.

The customer above utters the one-word question “Why?” when asked for the police report. Eva tells him “We’re asking because we need the police report number to file a claim to replace a stolen phone.”

This explainability can be backward-chained, i.e. reporting on past events in the conversation or the initial aggregation of the AI graph that led to this point in time, or forward-looking as above, stating the intended goals or probabilities of outcomes when explaining the reasoning for a decision.
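The difference between the two directions of explanation can be illustrated with a small sketch. It assumes, purely for illustration, that each agent question is stored along with the goal it serves and the earlier event that prompted it; the `AgentQuestion` class, the `explain` function, and the wording of the backward-looking answer are hypothetical and not taken from the Openstream.ai product.

```python
from dataclasses import dataclass


@dataclass
class AgentQuestion:
    text: str     # what the agent asked
    purpose: str  # the goal the question serves (forward-looking)
    trigger: str  # the earlier conversational event that prompted it (backward-looking)


def explain(question, direction="forward"):
    """Answer a customer's "Why?" from either the goal or the conversation history."""
    if direction == "forward":
        return f"We're asking because {question.purpose}."
    if direction == "backward":
        return f"We're asking because {question.trigger}."
    raise ValueError(f"unknown direction: {direction!r}")


question = AgentQuestion(
    text="Did you file a police report?",
    purpose="we need the police report number to file a claim to replace a stolen phone",
    trigger="you told us your phone is stolen",  # hypothetical phrasing
)

forward = explain(question)
backward = explain(question, "backward")
```

Here `forward` reproduces the answer quoted in the example conversation, while `backward` points at what the customer said earlier.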

The Intellyx Take

Futurists like to talk about the “uncanny valley” between early chatbots and the development of conversational AI that is so believable that humans cannot even sense that they aren’t talking to another human.

While that makes for a fascinating thought experiment, the point of an intelligent virtual CSR is not to “fool” the human customer, but to deliver better customer outcomes by building up a specialized knowledge domain that understands the customer goals and obstacles of the industry theater it is deployed in.

Read the article on the Openstream blog here >

©2022 Intellyx LLC. Intellyx is editorially responsible for the content of this document. At the time of writing, Openstream.ai is an Intellyx client. Image sources: Sophiaaa, Simplr, flickr CC 2.0 open source; Openstream.ai conversation demo screen.