In the first three posts of this four-post blog series, my colleague Charles Araujo and I have explored the challenges of the shared responsibility model in the context of hybrid IT.

In spite of the complex technologies involved, the challenges we have been pointing out have largely been organizational – the human side of dealing with complex, dynamic technology environments in the face of shifting enterprise business priorities.

One of the primary architectural tools we have for bridging the technological and organizational worlds is the power of abstraction. Cloud computing is itself a collection of abstractions, separating the underlying implementation decisions of the cloud provider from the ease-of-use benefits that accrue to the cloud customer, while forming the technical underpinnings of the shared responsibility model.

And yet, while we can focus our attention on the flexibility and simplicity that the abstractions of cloud computing afford us, we must also realize that technology is at the core of everything we do in the hybrid IT context.

Drilling Down into Traffic Flows

We must understand the realities of that technology at whatever level of detail we require to ensure our complex environments meet our business needs in a flexible, secure manner.

As we drill down into these levels of detail, however, we soon reach a limit: cloud providers must draw a line that customers cannot cross, lest customers interfere with one another in an inherently multitenant environment.

One important example: flow logs. Flow logs, a feature offered by Amazon Web Services (AWS), Microsoft Azure, and other public cloud providers, capture information about Internet Protocol (IP) traffic flowing to and from network interfaces in each provider's cloud environment.

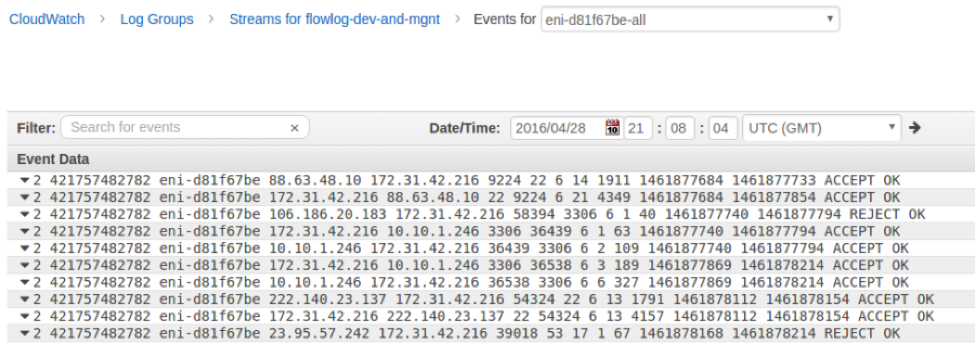

Flow logs consist of a particular set of metadata (not the data payload itself), including the source, destination, and protocol for an IP flow. Examples of AWS flow log records appear in the accompanying screenshot.

Sample Flow Log Records (Source: Amazon.com)

There are variations among the different providers' flow logs, both in the specific metadata they include and in their format: Azure delivers them in JSON, while AWS provides each record as a space-delimited string (i.e., a typical log file record) that the user must parse or format as appropriate.
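To make the format difference concrete, here is a minimal Python sketch that normalizes both kinds of record into one type. It assumes the AWS default (version 2) field order and the first eight fields of an Azure network security group (NSG) flow tuple, taken as a single string after the surrounding JSON has already been parsed. The `Flow` class, the function names, and the sample addresses are hypothetical, and custom AWS log formats are not handled.

```python
from dataclasses import dataclass
from typing import Optional

# Field order of the AWS VPC Flow Logs default (version 2) record format.
AWS_DEFAULT_FIELDS = (
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
)

# AWS reports IANA protocol numbers; Azure NSG tuples use single letters.
AWS_PROTOCOLS = {6: "TCP", 17: "UDP"}
AZURE_PROTOCOLS = {"T": "TCP", "U": "UDP"}


@dataclass
class Flow:
    """Provider-neutral view of one flow (hypothetical common schema)."""
    src: str
    dst: str
    src_port: int
    dst_port: int
    protocol: str
    allowed: bool


def parse_aws_record(line: str) -> Optional[Flow]:
    """Parse one space-delimited AWS record; return None if it carries no flow data."""
    values = line.split()
    if len(values) != len(AWS_DEFAULT_FIELDS):
        raise ValueError(f"expected {len(AWS_DEFAULT_FIELDS)} fields, got {len(values)}")
    rec = dict(zip(AWS_DEFAULT_FIELDS, values))
    if rec["log-status"] != "OK":  # NODATA / SKIPDATA records use "-" placeholders
        return None
    proto = int(rec["protocol"])
    return Flow(
        src=rec["srcaddr"],
        dst=rec["dstaddr"],
        src_port=int(rec["srcport"]),
        dst_port=int(rec["dstport"]),
        protocol=AWS_PROTOCOLS.get(proto, str(proto)),
        allowed=rec["action"] == "ACCEPT",
    )


def parse_azure_tuple(flow_tuple: str) -> Flow:
    """Parse one comma-separated NSG flow tuple already extracted from the JSON."""
    # First eight fields: timestamp, src IP, dst IP, src port, dst port,
    # protocol (T/U), direction (I/O), decision (A/D).
    _ts, src, dst, sport, dport, proto, _direction, decision = flow_tuple.split(",")[:8]
    return Flow(src, dst, int(sport), int(dport),
                AZURE_PROTOCOLS.get(proto, proto), decision == "A")


aws = parse_aws_record(
    "2 123456789012 eni-0a1b2c3d 198.51.100.7 10.0.1.5 49152 443 6 10 840 "
    "1700000000 1700000060 ACCEPT OK")
azure = parse_azure_tuple("1700000000,198.51.100.7,10.0.1.5,49152,443,T,I,A")
print(aws == azure)  # True
```

Once both providers' records share a schema, the security checks discussed below can be written once rather than per provider.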

Users can create flow logs at several scopes: for a virtual private cloud (VPC), a subnet, or a network interface, including network interfaces that other cloud services create. In the case of AWS, users can create flow logs for network interfaces created by Elastic Load Balancing, Amazon RDS, Amazon ElastiCache, Amazon Redshift, and Amazon WorkSpaces.
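On AWS, these scopes correspond to the `ResourceType` parameter of the EC2 `CreateFlowLogs` API. The sketch below only assembles the keyword arguments for boto3's `create_flow_logs` call and leaves the call itself commented out, so it runs without credentials. The helper name, the ENI ID, and the bucket ARN are hypothetical, Amazon S3 is assumed as the log destination, and any other resource types the API may accept are deliberately ignored.

```python
# The three resource scopes described above, as spelled by the CreateFlowLogs API.
RESOURCE_TYPES = {"VPC", "Subnet", "NetworkInterface"}
TRAFFIC_TYPES = {"ACCEPT", "REJECT", "ALL"}


def flow_log_request(resource_type, resource_ids, bucket_arn, traffic_type="ALL"):
    """Build keyword arguments for boto3's EC2 create_flow_logs call (hypothetical helper)."""
    if resource_type not in RESOURCE_TYPES:
        raise ValueError(f"unsupported resource type: {resource_type}")
    if traffic_type not in TRAFFIC_TYPES:
        raise ValueError(f"unsupported traffic type: {traffic_type}")
    if not resource_ids:
        raise ValueError("at least one resource ID is required")
    return {
        "ResourceType": resource_type,
        "ResourceIds": list(resource_ids),
        "TrafficType": traffic_type,
        "LogDestinationType": "s3",
        "LogDestination": bucket_arn,
    }


# Hypothetical ENI (for example, one created by a load balancer) and bucket.
params = flow_log_request(
    "NetworkInterface", ["eni-0a1b2c3d"], "arn:aws:s3:::example-flow-log-bucket")

# With AWS credentials configured, the request would be sent with:
#   import boto3
#   boto3.client("ec2").create_flow_logs(**params)
print(params["ResourceType"], params["ResourceIds"])
```

Interfaces created by other AWS services are covered the same way: the caller passes their ENI IDs with `ResourceType` set to `NetworkInterface`.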

The Use Cases for Flow Logs

Flow logs give ops/DevOps users visibility into normal traffic patterns as well as the ability to contrast anomalous patterns with normal ones.
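One simple way to express "normal" versus "anomalous" is a statistical baseline. The sketch below is a hypothetical z-score test, not any provider's built-in feature: it flags an interval whose byte count sits more than `k` standard deviations above the historical mean for the same flow. The threshold `k=3` and the sample numbers are illustrative assumptions.

```python
from statistics import mean, pstdev


def is_anomalous(baseline, observed, k=3.0):
    """Flag `observed` if it exceeds the baseline mean by more than k standard deviations.

    `baseline` holds byte counts for earlier, presumed-normal intervals of the same
    flow (for example, one source/destination pair), summed from flow log records.
    """
    if len(baseline) < 2:
        return False  # too little history to define "normal"
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        return observed > mu  # perfectly steady baseline: any increase stands out
    return (observed - mu) / sigma > k


history = [1000, 1100, 900, 1050, 950]  # hypothetical bytes per minute
print(is_anomalous(history, 1080))  # False: within normal variation
print(is_anomalous(history, 5000))  # True: far above the baseline
```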

However, the most important uses for flow logs center on security. Because flow logs contain metadata about IP addresses, ports, and protocols, they give security analysts the ability to differentiate between legitimate and unauthorized traffic.
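As a rough illustration, a record's protocol, destination port, and action fields can be checked against the services a subnet is supposed to expose. The allowlist below is a hypothetical policy and the labels are illustrative; `REJECT` is the action AWS records for traffic that its security groups or network ACLs denied.

```python
# Hypothetical policy: the only services this subnet is meant to expose,
# keyed by (IANA protocol number, destination port); 6 = TCP.
EXPECTED_SERVICES = {(6, 443), (6, 22)}


def classify(protocol, dst_port, action):
    """Label one flow log record using its protocol, destination port, and action."""
    if action == "REJECT":
        return "blocked"     # denied by a security group or network ACL
    if (protocol, dst_port) in EXPECTED_SERVICES:
        return "expected"    # accepted and consistent with policy
    return "unexpected"      # accepted, yet outside the intended policy


print(classify(6, 443, "ACCEPT"))   # expected
print(classify(6, 3389, "ACCEPT"))  # unexpected
print(classify(6, 22, "REJECT"))    # blocked
```

An "unexpected" result does not prove an attack; it marks traffic that an analyst should explain.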

They also provide sufficient information for analysts to compare traffic metadata with blacklists in order to identify known malicious sources of traffic.
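A blacklist check needs only the addresses in each record. This sketch uses Python's standard `ipaddress` module; the blacklist entries come from the reserved documentation ranges and stand in, hypothetically, for a real threat-intelligence feed, and flows are simplified to (source, destination) pairs.

```python
import ipaddress

# Hypothetical blacklist; a real deployment would load a threat-intelligence feed.
BLACKLIST = [ipaddress.ip_network(n) for n in ("203.0.113.0/24", "198.51.100.66/32")]


def is_blacklisted(addr):
    """True if the address falls inside any blacklisted network."""
    ip = ipaddress.ip_address(addr)
    # Membership between IPv4 and IPv6 objects simply evaluates to False.
    return any(ip in net for net in BLACKLIST)


def flag_malicious(flows):
    """Return the (source, destination) pairs whose source is blacklisted."""
    return [flow for flow in flows if is_blacklisted(flow[0])]


flows = [("203.0.113.45", "10.0.1.5"), ("198.51.100.7", "10.0.1.5")]
print(flag_malicious(flows))  # [('203.0.113.45', '10.0.1.5')]
```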

However, flow logs have a significant limitation. Because of the fundamental multitenant architecture of public clouds, flow logs cannot provide visibility into flows at the packet level. As a result, flow logs are inadequate for any use case that requires content inspection.

Security analysts in particular require more than flow metadata to do their jobs properly. They also require full-packet network data, as such information is essential for intrusion detection, data loss prevention, and malware analysis – and in fact, any task that requires content inspection.
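By contrast, content inspection operates on the bytes a flow actually carried, which flow log metadata never includes. The following toy sketch extracts the TCP payload from a raw IPv4 packet and searches it for a byte signature, the basic step behind signature-based intrusion detection. It is not a real IDS: the signature is hypothetical, and the test packet is hand-built with zeroed checksums rather than taken from a live capture.

```python
import struct


def tcp_payload(packet: bytes) -> bytes:
    """Return the TCP payload carried by a raw IPv4 packet."""
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    ip_header_len = (packet[0] & 0x0F) * 4          # IHL is in 32-bit words
    if packet[9] != 6:                               # protocol field; 6 = TCP
        raise ValueError("not a TCP segment")
    (total_len,) = struct.unpack("!H", packet[2:4])  # IP total length in bytes
    segment = packet[ip_header_len:total_len]
    tcp_header_len = (segment[12] >> 4) * 4          # TCP data offset, 32-bit words
    return segment[tcp_header_len:]


def matches_signature(packet: bytes, signature: bytes) -> bool:
    """Naive content inspection: does the payload contain the signature bytes?"""
    return signature in tcp_payload(packet)


def build_packet(payload: bytes) -> bytes:
    """Hand-build an IPv4/TCP packet for testing (checksums left at zero)."""
    tcp_header = struct.pack("!HHIIBBHHH", 49152, 80, 0, 0, 5 << 4, 0x18, 65535, 0, 0)
    total_len = 20 + len(tcp_header) + len(payload)
    ip_header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, total_len, 0, 0, 64, 6, 0,
                            bytes([198, 51, 100, 7]), bytes([10, 0, 1, 5]))
    return ip_header + tcp_header + payload


packet = build_packet(b"GET /index.php?cmd=whoami HTTP/1.1\r\n")
print(matches_signature(packet, b"cmd=whoami"))  # True
```

None of these payload bytes appear in a flow log record, which is why this kind of check depends on full-packet data.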

Ops management personnel also require this level of access to network data to ensure high levels of network performance, since insight into the data that workloads exchange with one another, an essential element of hybrid IT architectures, depends on visibility into full-packet network data.

The Intellyx Take

There is an old saying that with great power comes great responsibility. In fact, the relationship between the two works both ways: to achieve great responsibility, one must also have great power.

Such is the nature of the cloud's shared responsibility model. The only reason we are discussing this model in the first place is that the underlying technology is powerful enough to make such discussions relevant.

The original saying, however, still applies: to achieve the full power of the cloud's technology, we must take full responsibility for that technology at every level.

Such is the lesson of important tools like the Gigamon Visibility Platform for AWS, which provides packet-level visibility into data in motion in the cloud. This platform gives security analysts the power to take control of network performance, behavior, and security, beyond what cloud providers are able to deliver with flow logs.

Furthermore, as organizations implement increasingly complex and diverse hybrid IT architectures, the visibility such tools provide becomes ever more important for ensuring seamless, secure workload portability and interaction in these environments.

Copyright © Intellyx LLC. Gigamon is an Intellyx client. Intellyx retains full editorial control over the content of this paper.