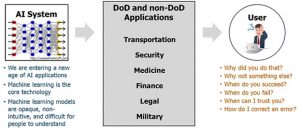

Despite its promise, the growing field of Artificial Intelligence (AI) is experiencing a variety of growing pains. In addition to the problem of bias I discussed in a previous article, there is also the ‘black box’ problem: if people don’t know how AI comes up with its decisions, they won’t trust it.

In fact, this lack of trust was at the heart of many failures of one of the best-known AI efforts: IBM Watson – in particular, Watson for Oncology.

Experts were quick to single out the problem. “IBM’s attempt to promote its supercomputer programme to cancer doctors (Watson for Oncology) was a PR disaster,” says Vyacheslav Polonski, PhD, UX researcher for Google and founder of Avantgarde Analytics. “The problem with Watson for Oncology was that doctors simply didn’t trust it.”

When Watson’s results agreed with the physicians’ own conclusions, it provided confirmation but didn’t help them reach a diagnosis. When Watson disagreed, physicians simply thought it was wrong.

If the doctors knew how it came to its conclusions, the results might have been different. “AI’s decision-making process is usually too difficult for most people to understand,” Polonski continues. “And interacting with something we don’t understand can cause anxiety and make us feel like we’re losing control.”

The Need for Explainability

If oncologists had understood how Watson had come up with its answers – what the industry refers to as ‘explainability’ – their trust level might have been higher.

Read the entire article at https://www.forbes.com/sites/jasonbloomberg/2018/09/16/dont-trust-artificial-intelligence-time-to-open-the-ai-black-box/.

Intellyx publishes the Agile Digital Transformation Roadmap poster, advises companies on their digital transformation initiatives, and helps vendors communicate their agility stories. As of the time of writing, IBM is an Intellyx customer. None of the other organizations mentioned in this article are Intellyx customers. Image credit: DARPA. The use of this image is not intended to state or imply the endorsement by DARPA, the Department of Defense (DoD) or any DARPA employee of a product, service or non-Federal entity.